In one case he had a single GeoTIFF file with overviews built by GDAL's gdaladdo utility in a separate .ovr file. Only one MapServer LAYER was used to refer to this image. In the second case he used a distinct TIFF file for each power-of-two overview level, and a distinct LAYER with minscale/maxscale settings for each file. So in the first case it was up to GDAL to select the optimum overview level out of a merged file, while in the second case MapServer decided which overview to use via the layer scale selection logic.

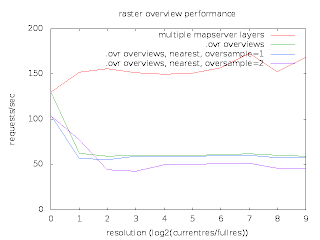

He was seeing the MapServer multi-layer approach perform about twice as well as letting GDAL select the right overview. He prepared a graph and scripts with everything I needed to reproduce his results.

I was able to reproduce similar results at my end, and set to work trying to analyse what was going on. The scripts used apache-bench to run MapServer in its usual cgi-bin incarnation. This is a typical use case, and shows the aggregate performance. However, my usual technique for investigating performance bottlenecks is very low-tech. I do a long run demonstrating the issue in gdb, hit Ctrl-C frequently, and use "where" to examine what is going on. If the bottleneck is dramatic enough this is usually informative. But I could not apply this to the very short-lived cgi-bin processes.

So I set out to establish a similar workload in a single long-running MapScript application. In doing so I discovered a few things. First, there was no way to easily load the OWSRequest parameters from a URL except through the QUERY_STRING environment variable. So I extended mapscript/swiginc/owsrequest.i to have a loadParamsFromURL() method. My test script then looked like:

    import mapscript

    map = mapscript.mapObj( 'cnes.map')

    # Capture MapServer output in a buffer instead of writing it to stdout.
    mapscript.msIO_installStdoutToBuffer()

    # Issue the same WMS GetMap request repeatedly in one long-running process.
    for i in range(1000):
        req = mapscript.OWSRequest()
        req.loadParamsFromURL( 'LAYERS=truemarble-gdal&FORMAT=image/jpeg&SERVICE=WMS&VERSION=1.1.1&REQUEST=GetMap&STYLES=&EXCEPTIONS=application/vnd.ogc.se_inimage&SRS=EPSG%3A900913&BBOX=1663269.7343875,1203424.5723063,1673053.6740063,1213208.511925&WIDTH=256&HEIGHT=256')
        map.OWSDispatch( req )

The second thing I learned is that Thomas' recent work to directly use libjpeg and libpng for output in MapServer had not honoured the msIO_ IO redirection mechanism needed for the above. I fixed that too.

This gave me a process that would run for a while and that I could debug with gdb. A sampling of "what is going on" showed that much of the time was being spent loading TIFF tags from directories - particularly the tile offset and tile size tag values.

The base file used is 130000 x 150000 pixels, and is internally broken up into nearly 900000 256x256 tiles. Any map request would only use a few tiles but the array of pointers to tiles and their sizes for just the base image amounted to approximately 14MB. So in order to get about 100K of imagery out of the files we were also reading at least 14MB of tile index/sizes.
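The figures above can be checked with a little arithmetic. This is only a sketch: the band count and the eight bytes per offset and per size entry are assumptions chosen because they land close to the numbers quoted, not details stated in the post.

```python
import math

# Figures from the post.
width, height = 130000, 150000
tile = 256

# Assumptions (NOT stated in the post): three bands stored as separate
# planes, and 8 bytes for each tile offset and each tile size entry.
bands = 3
bytes_per_entry = 8

tiles_across = math.ceil(width / tile)
tiles_down = math.ceil(height / tile)
total_tiles = tiles_across * tiles_down * bands

# One offset plus one size per tile.
index_bytes = total_tiles * 2 * bytes_per_entry

print(total_tiles, "tiles,", round(index_bytes / 1e6, 1), "MB of offsets and sizes")
```

Under those assumptions the tile count comes out a little under 900000 and the index a little over 14MB, against roughly 100K of imagery actually delivered per request.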

The GDAL overview case was worse than the MapServer overview case because when opening the file GDAL scans through all the overview directories to identify what overviews are available. This means we have to load the tile offset/size values for all the overviews regardless of whether we will use them later. When the offset/size values are read to scan a directory they are subsequently discarded when the next directory is read. So in cases where we need to come back to a particular overview level we still have to reload the offsets and sizes.

For several years I have had some concerns about the efficiency of files with large tile offset/size arrays, and with the cost of jumping back and forth between different overviews with GDAL. This case highlighted the issue. It also suggested an obvious optimization - to avoid loading the tile offset and size values until we actually need them. If we access an image directory (i.e. an overview level) to get general information about it such as the size, but we don't actually need imagery, we could completely skip reading the offsets and sizes.
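The deferred-loading idea can be illustrated with a toy model. This is not libtiff code: the `Directory` class, the `read_index` callable and the placeholder index contents are all hypothetical, and the sketch only shows the general pattern of postponing an expensive read until the data is first needed.

```python
class Directory:
    """Toy model of one image directory (base image or an overview level)."""

    def __init__(self, width, height, read_index):
        self.width = width
        self.height = height
        self._read_index = read_index  # hypothetical callable doing the expensive read
        self._index = None             # tile offsets/sizes, not loaded yet

    @property
    def tile_index(self):
        # Load the offsets and sizes only on first use.
        if self._index is None:
            self._index = self._read_index()
        return self._index


reads = []  # records which levels actually had their index loaded


def make_reader(level):
    def read_index():
        reads.append(level)
        return [(0, 0)]  # placeholder (offset, size) pairs
    return read_index


levels = [Directory(130000 >> n, 150000 >> n, make_reader(n)) for n in range(4)]

# Scanning every level for its dimensions touches no tile index at all.
sizes = [(d.width, d.height) for d in levels]
loads_after_scan = len(reads)

# Only the level we actually render from pays for its index, and only once.
_ = levels[2].tile_index
_ = levels[2].tile_index
print(loads_after_scan, reads)
```

In this model, enumerating the available overviews costs nothing beyond the basic directory information, which is the behaviour the optimization is after.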

So I set about implementing this in libtiff. It is helpful being a core maintainer of libtiff as well as GDAL and MapServer. :-) The change was a bit messy, and it also seemed a bit risky due to how libtiff handles directory tags. So I am treating the change as an experimental (default off) build-time option controlled by the new libtiff configure option --enable-defer-strile-load.

With the change in place, I now get comparable results using the two different overview strategies. Yippee! Pleased with this result, I have committed the changes to libtiff CVS head, and pulled them downstream into the "built in" copy of libtiff in GDAL.

However, it occurs to me that there is still an unfortunate amount of work being done to load the tile offset/size vectors when doing full resolution image accesses for MapServer. A format that computed the tile offset and size instead of storing all the offsets and sizes explicitly might do noticeably better for this use case. In fact, Erdas Imagine (with a .ige spill file) is such a format. Perhaps tonight I can compare to that and contemplate a specialized form of uncompressed TIFF file which doesn't need to store and load the tile offsets/sizes.
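As a minimal sketch of what "computing" an offset could look like, assume uncompressed tiles of identical size written contiguously in row-major order. That layout, the function name and the parameters below are illustrative assumptions, not a description of how Erdas Imagine or any existing TIFF variant actually arranges its data.

```python
def tile_offset(col, row, tiles_across, tile_bytes, data_start=0):
    """Byte offset of tile (col, row), assuming fixed-size tiles stored
    back to back in row-major order starting at data_start."""
    index = row * tiles_across + col
    return data_start + index * tile_bytes


# Assumed example values: 256x256 tiles, one byte per pixel, and 508 tiles
# across (a 130000 pixel wide image rounded up to whole tiles).
tile_bytes = 256 * 256
tiles_across = 508

print(tile_offset(0, 0, tiles_across, tile_bytes))
print(tile_offset(3, 2, tiles_across, tile_bytes, data_start=1024))
```

With a layout like this every tile has the same size and its offset is a single multiplication away, so nothing needs to be read from the file before fetching the few tiles a map request touches.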

I would like to thank Thomas Bonfort for identifying this issue, and providing such an easy to use set of scripts to reproduce and demonstrate the problem. I would also like to thank MapGears and USACE for supporting my time on this and other MapServer raster problems.
